In database replication, “immediately” never feels quite fast enough for your users, does it?

“We replicate transactions from production databases in real time,” you tell them. “You’ll see the change immediately, as soon as the transactions hit.”

They watch the screen. They refresh it a half-dozen times. They keep seeing the same values as before.

“What am I doing wrong?” they ask. “When will I see the all the latest transactions?”

They’re doing nothing wrong, of course. They’ll see the new transactions once the database latency is done with them.

Why database replication? Why database latency?

Smart companies use database replication for migrations, data staging, analytics and business intelligence (BI). They replicate from their production database to a target, and then run their users’ queries and reports against it. That way, there’s less interference and traffic on production databases.

But you can’t get away from latency, which is the time it takes for data to get from the source database to the target database. Your users lose time and productivity. Your analysts and BI users never know quite as much as they should because your information is always a little bit stale.

The lower the latency, the faster you can see your data in your BI. Ideally, you would reduce database latency to almost zero so that your users could see their data in near-real-time.

If automated database replication is such a good idea , then why do I have to deal with days or hours of database latency?

Because you’re doing it wrong.

Do you replicate manually? If so, you’re just asking for latency – hours or even days of it. Same with batch processing and Extract, Transform and Load (ETL), the traditional methods of creating a replica of your databases. Big-time latency is inherent to all of them because they depend on manually posting batches of updates from source to target once a day or every few hours.

You could run an ecommerce or mobile campaign on Tuesday and not be able to measure effectiveness until Wednesday. That’s a long way from “immediately.”

Even most options for automated database replication involve a system for manually extracting batches at predetermined intervals, which means that it’s not so automatic. If you replicate too often, you’ll place too many demands on your database, overloading it and hampering your users’ productivity. And you still have to load your updates after extracting them, which takes time.

Do you like having a target database that’s always hours or days behind your production database? Then stick to manual, batch and ETL replication.

But no matter which approach you take to replication, you’re going to have database latency. It’s a matter of tuning. If you follow a manual approach and update every 60 minutes, your latency will be one hour plus the time it takes you to replicate the changes, so probably 70 minutes. The longer you wait to capture and post batches, the greater the latency, because there are more updates.

How can I reduce database latency in my Oracle environment?

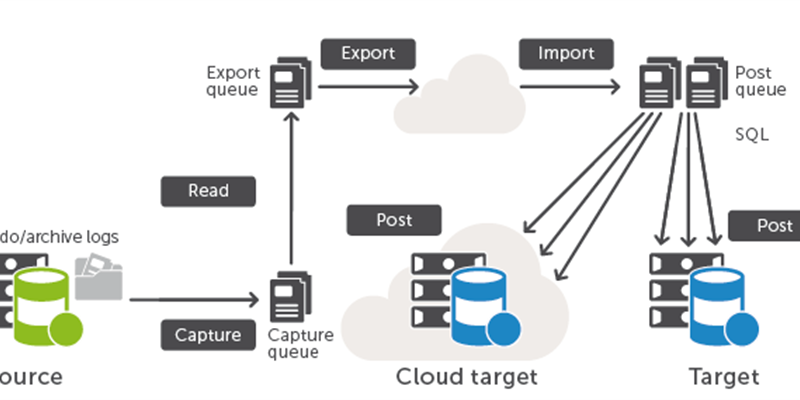

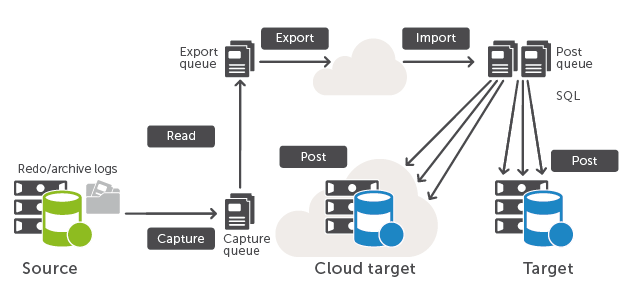

Get away from batch processing and ETL replication. The better technical route is to capture data in real time from the Oracle redo logs, and replicate only those changes, as shown in the image below.

An even more important technique is to extract data directly from the redo logs before the transaction has been committed and replicate it to the target database. The upside is greatly decreased latency and near-real-time replication; the downside is the very low risk that the transaction will be revoked or modified.

You’ll still see database latency, but it will be on the order of seconds or even fractions of a second.

At that level, you can also tune the factors that contribute to latency. Say that activity spikes at certain hours of the day in response to peak user activity. You can allocate more memory and queue up more changes for less frequent write operations to disk. You can allocate more CPU cycles or processing time, optimize the traffic going across the network or store the target database on a faster disk.

Meet Your Data-Sharing Needs Now that Oracle Streams Is Deprecated

Oracle had a replication product called Oracle Streams designed to reduce database latency. We’ve written a tech brief called Meeting Your Data-Sharing Needs Now that Oracle Streams Is Depreciated to explain replication and latency in the Oracle environment. Use it to refine your replication strategy, reduce latency in your Oracle environment and get your users a lot closer to “immediately.”