Digital transformation is everywhere

Organizations are changing fast to keep up with business demands. Digital transformation is driven by the business, but IT (both central and business-run) makes it happen. As organizations take on new database platforms, analytics tools and other digital transformation initiatives, their ability to empower people and processes with data can be a game-changer.

One such digital transformation initiative is the adoption of new types of database platforms, both within IT and in business areas of the organization. This is not new, but it is increasingly common. As of 2020, according to Unisphere research of database owners and managers, over 80% of DBAs manage more than one type of database. Databases are also being migrated to the cloud at increasing rates: research projects that by the end of 2022, 75% of all databases will be in the cloud. That includes critical production databases used to drive the business.

Risks of managing multiple database platforms

How well a company manages multiple database platforms during this transformation is a key factor in whether it achieves its business goals.

So, what are the risks to the business, and to the success of strategies like cloud migration and data diversity? Here are five common risks that need to be mitigated to ensure success.

Database platform diversity overwhelms operations/admins

New database platforms are being chosen by IT in greater numbers, but they are also being chosen by lines-of-business architects. Business-owned IT offers a great deal of agility to application owners and others responsible for keeping up with customer-driven changes in the business. It can also compound problems for DBAs and others in IT operations who must one day manage those new database platforms. Skills gaps are becoming a huge concern to CIOs and other leaders as the velocity of change and the sudden influx of new database platforms overtake the operations teams’ ability to manage:

- Performance

- Availability and recoverability

- Reliability/consistency of the data

- Access

Managing all of these becomes much harder when the databases are of a type in which the team hasn’t yet collectively built expertise.

Costs go up

A common fallacy in cloud migration strategy is that migration will automatically save the organization a significant amount of money. It should save money, but planning is necessary to realize the possible financial benefits of:

- Not owning one’s own hardware; and

- Buying just the cloud service with only the processing power necessary

Planning is necessary well before the actual migration of a workload, since moving workloads and data to the cloud without proper planning can easily lead to over-allocation. This is especially true if cloud migration decisions are made to “cover the worst case” – allocating plenty of resources in the cloud service so that there is virtually no chance of CPU, memory or storage constraints affecting the performance and availability of a database or application.

Of course, buying more processing power than is necessary for a database’s workload, and for the surrounding services that accompany the database on virtual machines, is going to cost the organization money needlessly, and potentially a lot of money, depending on the number of virtual machines to be migrated.
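To make the cost of “covering the worst case” concrete, here is a minimal arithmetic sketch. The function name, the VM counts and the price per vCPU-hour are all hypothetical and chosen only for illustration; they do not come from any cloud provider’s price list.

```python
def monthly_overallocation_cost(vm_count, allocated_vcpus, needed_vcpus,
                                price_per_vcpu_hour, hours_per_month=730):
    """Estimate the monthly cost of vCPUs that are paid for but not needed.

    All inputs are illustrative assumptions; real pricing depends on the
    provider, region and service tier.
    """
    if needed_vcpus > allocated_vcpus:
        raise ValueError("workload needs more vCPUs than were allocated")
    unused_vcpus = allocated_vcpus - needed_vcpus
    return vm_count * unused_vcpus * price_per_vcpu_hour * hours_per_month


# Hypothetical scenario: 40 VMs sized at 16 vCPUs each "to be safe",
# while observed peak demand would fit in 8 vCPUs, at $0.05 per vCPU-hour.
waste = monthly_overallocation_cost(40, 16, 8, 0.05)
print(f"Estimated monthly over-allocation: ${waste:,.2f}")
```

Under these made-up numbers the unused capacity comes to roughly $11,680 a month, which shows how quickly the figure grows with the number of virtual machines migrated.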

Cloud sprawl becomes a management nightmare

What happens when adding cloud instances is so easy that people all over the organization create them? Cloud sprawl happens. Rather than asking IT for a database platform on which to run a new instance, lines-of-business architects, application operations and others choose to do it themselves. That creates issues down the road when IT is asked to support these cloud-based databases after the fact. This adds to IT’s burden because:

- Self-provisioned machines might not be well-planned in terms of resource allocations and best-fit cloud services

- Poorly optimized database workloads may experience performance problems or consume too many resources

- The sheer number of additional cloud instances represents an unplanned influx of work for an already taxed IT operations team

Moving too much processing to the cloud

Databases residing on virtual machines (instead of physical servers) can be moved to the cloud these days in any number of ways, and migration tools are abundant in the market. But being able to move them doesn’t mean that every virtual machine should be migrated to the cloud. Selectivity is key: every organization needs processes in place to ensure that virtual machines housing databases are prioritized for migration based on the value that migration will bring and the expected ease of the migration. Those processes should also verify that any migrated database workload runs within its allocated CPU, memory and storage. Both the database’s workload processing demands and the resource allocations for the virtual machine itself should be optimized to reduce unnecessary processing power (and therefore cost) once in the cloud.

And if the database’s virtual machine ends up in a poorly fitted service tier in the cloud, what becomes of performance? We all know that databases feed on CPU and memory. If those become too scarce in a particular cloud service, do you just plan to display a sad message on your applications’ UI dashboards and shopping carts: “Out to lunch; come back later”? Nope – you really must optimize those workloads first, ahead of the cloud migration. Then the operations folks can match the workload being migrated (one or more virtual machines) to the best-fitting cloud service tier, at the lowest possible cost that still supports optimal service delivery.
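The tier-matching step described above can be sketched as a simple selection: among the tiers that cover the workload’s observed peak plus some headroom, take the cheapest. The `Tier` class, the tier names, sizes and prices, and the 20% headroom default are all hypothetical assumptions for illustration, not any provider’s catalog.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Tier:
    """A hypothetical cloud service tier."""
    name: str
    vcpus: int
    memory_gb: int
    monthly_cost: float


def cheapest_fitting_tier(tiers: Sequence[Tier], peak_vcpus: float,
                          peak_memory_gb: float,
                          headroom: float = 0.2) -> Optional[Tier]:
    """Return the lowest-cost tier covering peak demand plus headroom.

    Returns None when no tier is large enough.
    """
    need_cpu = peak_vcpus * (1 + headroom)
    need_mem = peak_memory_gb * (1 + headroom)
    candidates = [t for t in tiers
                  if t.vcpus >= need_cpu and t.memory_gb >= need_mem]
    return min(candidates, key=lambda t: t.monthly_cost, default=None)


# Illustrative catalog and workload figures (not real pricing).
catalog = [
    Tier("small", 4, 16, 150.0),
    Tier("medium", 8, 32, 300.0),
    Tier("large", 16, 64, 600.0),
]
choice = cheapest_fitting_tier(catalog, peak_vcpus=6, peak_memory_gb=20)
print(choice.name if choice else "no tier fits")
```

The peak figures fed into a selection like this are only as good as the monitoring data behind them, which is why the workload should be optimized and measured before the tier is chosen.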

Performance and service levels degrade

What if a database that performed well on-premises suddenly slows down or experiences errors in the new cloud environment? Is there a slow network operation, or a resource that’s being stressed by over-consumption on a host? Proactively monitoring the performance of virtual environments and database platforms can provide insight into why a migrated workload isn’t running as well in the cloud.

But monitoring only after the move to the cloud isn’t sufficient for several reasons:

- If performance is bad in the cloud, do we know how it compares with performance when the database was on-premises? If not, it will be awfully time-consuming and difficult to prove that moving to the cloud caused the slowdown. Repatriating too quickly could be a mistake if there isn’t a clear picture of how the database performed on-premises to begin with.

- Finding the root cause of a performance problem, whenever possible, is the holy grail of database pros. Solving the problem so that it won’t recur is the kind of heroics that DBAs live for. They are problem-solvers. But database monitoring and workload analytics after the migration to the cloud can only help to a degree. A much more efficient, dependable and accurate picture of why a performance problem has now appeared is possible if a baseline of performance from the on-premises environment is available to compare against. The differences between the two monitoring samples could quickly tell the story of what’s causing the problem.

- Are service levels that were achievable on-premises still possible now that the database platform is in the cloud? How can we be sure when we’re “flying blind” about what might differ between the cloud and the on-premises environment, such as the performance levers available on the database? Do we even know for sure that resource allocations on the cloud service tier were chosen based on a reality check back on-premises? If not, IT has difficult discussions coming up with application owners and business areas based on guesses; educated guesses, maybe, but guesses nonetheless.
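The value of an on-premises baseline can be illustrated with a small comparison: given the same metrics captured before and after migration, flag the ones that got noticeably worse. This is only a sketch of the idea, not how any monitoring product works; the metric names, the sample values and the 10% tolerance are hypothetical, and the code assumes that a higher value is worse for every metric.

```python
def compare_to_baseline(baseline, current, tolerance=0.10):
    """Return metrics whose current value exceeds the baseline by more
    than `tolerance` (as a fraction), worst regression first.

    Assumes higher values are worse. Metrics missing from `current`, or
    with a non-positive baseline value, are skipped.
    """
    regressions = {}
    for metric, base_value in baseline.items():
        if metric not in current or base_value <= 0:
            continue
        change = (current[metric] - base_value) / base_value
        if change > tolerance:
            regressions[metric] = change
    return dict(sorted(regressions.items(),
                       key=lambda item: item[1], reverse=True))


# Hypothetical samples taken on-premises and after the move to the cloud.
on_prem = {"avg_query_ms": 40.0, "io_wait_ms": 5.0, "cpu_pct": 55.0}
in_cloud = {"avg_query_ms": 65.0, "io_wait_ms": 5.2, "cpu_pct": 58.0}

for metric, change in compare_to_baseline(on_prem, in_cloud).items():
    print(f"{metric}: {change:+.0%} versus on-premises baseline")
```

Without the `on_prem` sample there is nothing to compare against, which is the “flying blind” situation described above.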

Foglight for database monitoring helps mitigate these five risks on multiple database platforms

Foglight for Databases helps you efficiently manage diverse database platforms running on virtual machines across your environment, whether they move into hybrid cloud services or remain on-premises.

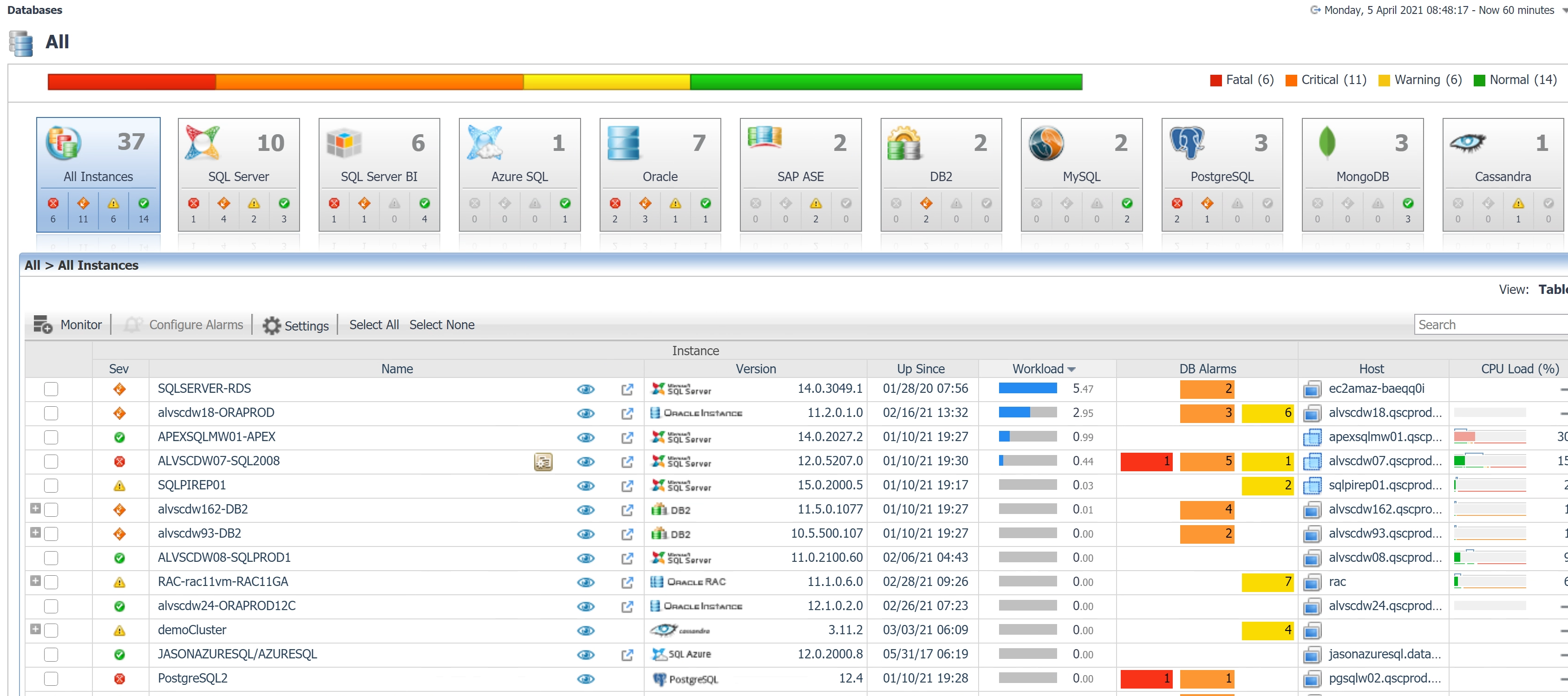

Fill skills gaps through consistency when new database platforms demand attention

Foglight for Databases is peerless in its coverage of multiple types of database platforms: traditional relational platforms like SQL Server, Oracle, DB2 and SAP; open source relational platforms like MySQL and PostgreSQL; the Redshift cloud data warehouse; and a growing list of NoSQL platforms. All of this comes in one product, with easily deployed monitoring that doesn’t add to your management burden but does provide needed consistency. A web interface lets you navigate quickly to the database instances that need the most attention and investigate problems in the past as well as those happening right now. That consistency is key to allowing database pros to solve problems faster, even without expertise on a particular database type.

Don’t pay for resources in the cloud that you’ll never use

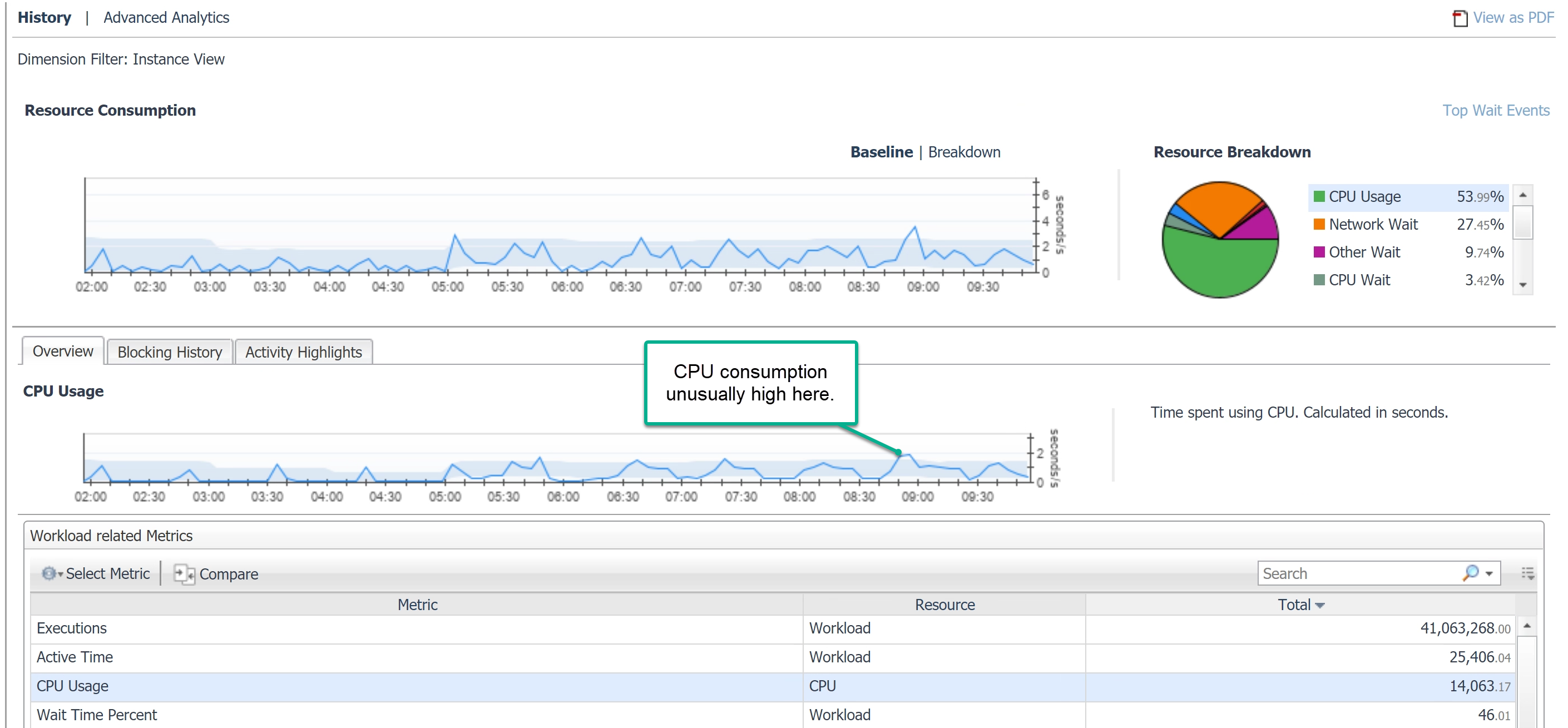

Foglight for Databases won’t stop you from selecting a too-large cloud service tier, but it sure can help you better judge what the “high end” of resource consumption for a database workload will be by showing the trends of performance and resource consumption over time.

By knowing the baseline of performance and resource consumption at “normal” times, as well as at peak demand times, the migration target environment can be chosen more wisely. Only pay for resources you really think you’ll need.
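As a rough illustration of what a “normal” versus “peak” baseline means numerically, the sketch below summarizes a series of utilization samples with the median and the 95th percentile. The function name, the choice of the 95th percentile and the sample values are assumptions made for this example; they do not describe how Foglight computes its baselines.

```python
import statistics


def summarize_utilization(samples):
    """Summarize utilization samples as a normal level and a peak level.

    "normal" is the median; "peak" is the 95th percentile, so that a few
    outlier spikes do not drive the sizing decision on their own.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    percentiles = statistics.quantiles(samples, n=100, method="inclusive")
    return {"normal": statistics.median(samples), "peak": percentiles[94]}


# Hypothetical hourly CPU utilization (%) for one database host.
cpu_samples = [22, 25, 24, 30, 28, 35, 41, 38, 33, 27, 26, 72, 29, 31, 36, 40]
summary = summarize_utilization(cpu_samples)
print(f"normal ~ {summary['normal']:.1f}%, peak ~ {summary['peak']:.1f}%")
```

Sizing the migration target against the peak figure rather than an imagined worst case is one way to avoid paying for capacity that the trend data says will not be used.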

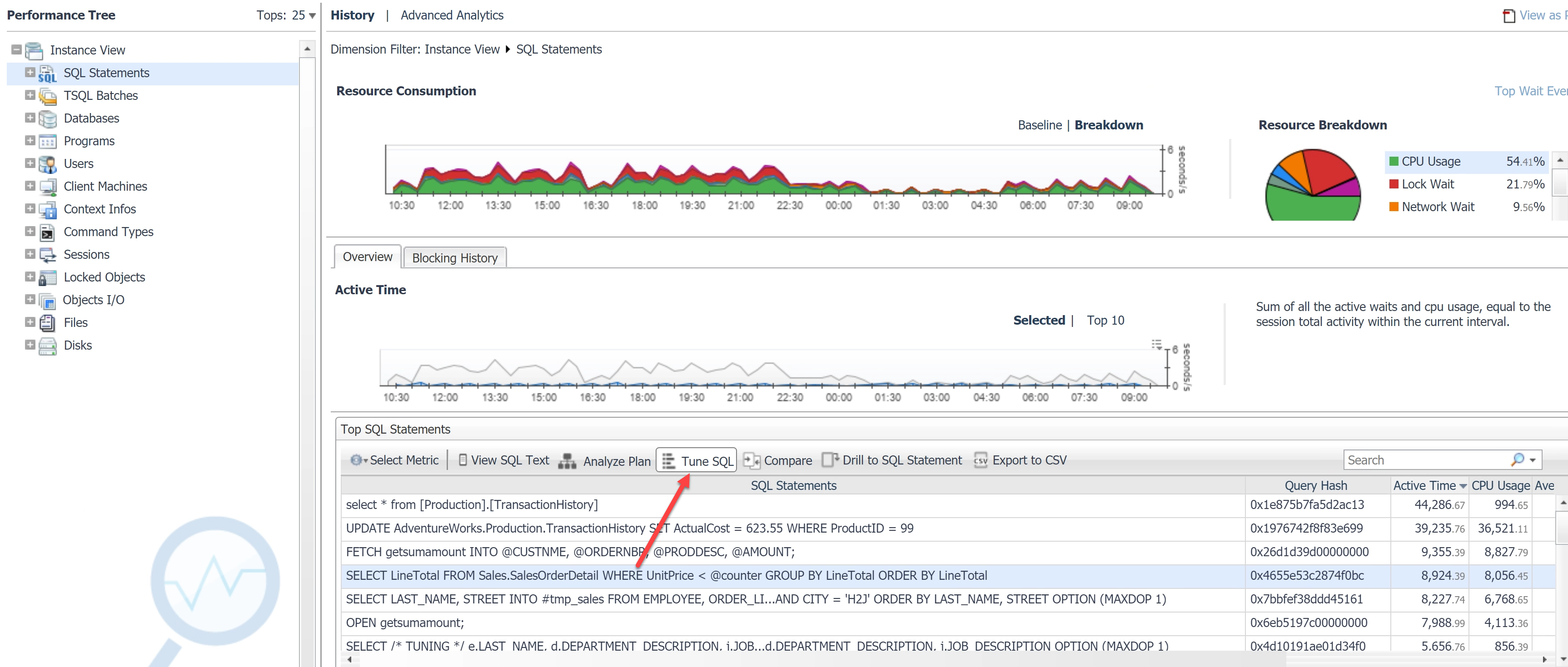

Monitor performance of database platforms before instances migrate to the cloud – and after

Monitoring database platforms and their workloads is something Foglight for Databases does very well. Take advantage of all it can provide, starting with workloads that are about to be migrated to the cloud. By monitoring them, and knowing whether performance is adequate, amazing or disappointing, you’ll know exactly what needs tuning and what should be optimized before moving them to the cloud.

Then, get a new baseline of performance. Know exactly how the database responds at peak usage times, etc. Know what sorts of wait events occur. If you can tune the database, the instance or the SQL even more, you’ll have that workload well documented, as well as running at its best. Then, after it migrates to the cloud, keep monitoring it, and you’ll be in a much better position to identify causes of problems if/when things don’t go quite the way they did when the database was on-premises.

Summary

As your organization grapples with providing support for new database platforms (perhaps a mix of relational, open source relational, NoSQL and data warehouses), some risks need to be mitigated to avoid clogging the organizational “data pipeline.”

Databases need to be monitored in a consistent way, with the same trends and health indicators visualized both in real time and historically, across all the types of databases that have been adopted in the organization. If a different monitoring product is needed for each type of database, that consistent look and feel and consistent collection of important metrics will be missing, which will compound the challenges your operations teams face every day.

To add to the urgency, digital transformations like cloud migrations, the importance of analytics to organizational decision-making, and fast delivery of new features and changes (DevOps) all demand 24x7 uptime and availability of every critical data source, no matter where it resides.

Foglight mitigates important risks to the success of these efforts:

- Overwhelmed operations teams and skills gaps

- Cost overruns

- Database and cloud sprawl

- Overallocation of cloud services, wasting real money

- Performance degradation

For more information, please visit the Foglight for Databases product page.