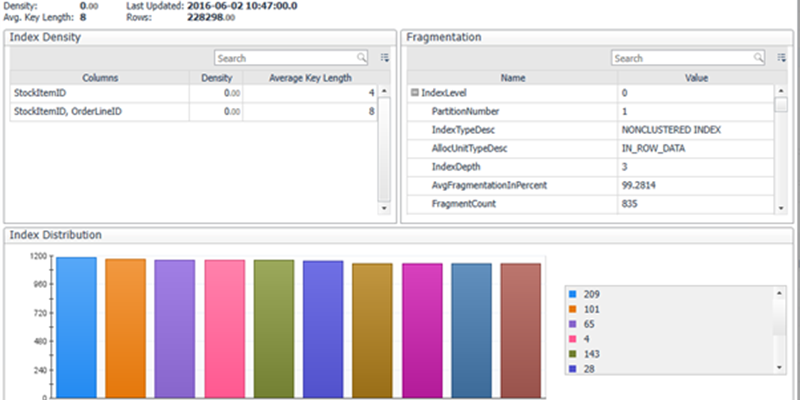

I received a question today from a customer about alerting on index fragmentation within Foglight. We don’t provide this feature out of the box, but we could add this as a custom feature.

So, to begin with, let’s consider how we find this information.

A quick web search finds several options such as

https://www.mssqltips.com/sqlservertip/1708/index-fragmentation-report-in-sql-server/

or

https://www.sqlshack.com/how-to-identify-and-resolve-sql-server-index-fragmentation/

SELECT top (10) S.name as 'Schema',

T.name as 'Table',

I.name as 'Index',

DDIPS.avg_fragmentation_in_percent,

DDIPS.page_count

FROM sys.dm_db_index_physical_stats (DB_ID(), NULL, NULL, NULL, NULL) AS DDIPS

INNER JOIN sys.tables T on T.object_id = DDIPS.object_id

INNER JOIN sys.schemas S on T.schema_id = S.schema_id

INNER JOIN sys.indexes I ON I.object_id = DDIPS.object_id

AND DDIPS.index_id = I.index_id

WHERE DDIPS.database_id = DB_ID()

and I.name is not null

AND DDIPS.avg_fragmentation_in_percent > 0

ORDER BY DDIPS.avg_fragmentation_in_percent desc

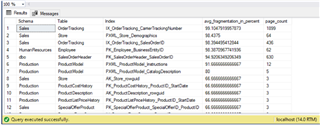

Would give us

So why not just let Foglight run this command once a day?

Well, it depends on a few considerations:

- What if you have a database with hundreds of large tables? The query would take a long time to run and have an impact on the performance.

- What if you also have an instance with lots of databases, with lots of large tables.

- When would be run this query? Overnight or during the day?

- What are we looking for? How many tables are fragmented (count) and over a particular threshold? Or do you want to select all the table names along with the fragmentation percentage.

Basically, the answers depend on your requirements and your environment. I think you need to review each case individually rather than run this on every database in your environment.

So, a good rule of thumb is to consider what you would do with this information. If Foglight collected a count metric of the number of tables with fragmentation over 90% against the database & schema name, would that be enough to alert the DBA who could then investigate. Certainly, the action of rebuilding an index needs to be considered, such as when and what the impact might be.

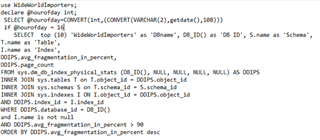

There is also another drawback that the user defined query feature in Foglight doesn’t have a scheduling feature, such as collect this metric at a particular as we shall see. I’ve got around this by making sure the query run by the agent can only run at a particular hour of the day.

The query looks like this:

I’ve coded it to only run against one particular database and only at 4pm each day. To avoid overloading Foglight with too much data, I’ve specified only the top 10 indexes are collected which are > 90% fragmented.

Here are the steps to load this into Foglight.

Connect to the target instance and go to settings.

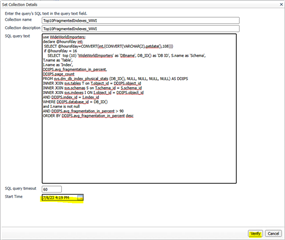

Click Set details

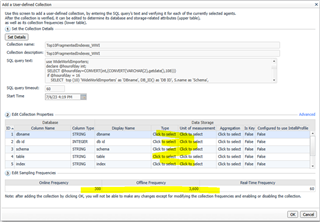

Test the query first, if it does take a long time you will have to increase the 60 sec query timeout setting.

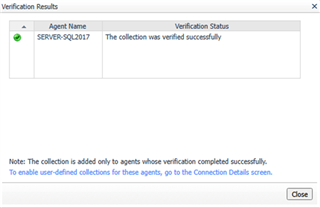

Make sure you click a start time in the future and you should get this on Verify.

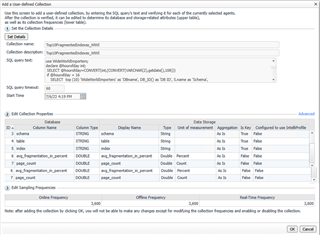

We need to tell Foglight what to do with the data, and, how often to sample. Note that there are 7 row, 2 are not shown below.

Click on type or unit of measurement to set as follows:

Then click Save Changes. Note this custom query could be cloned to other instances but I’m proposing that you use this feature wisely and only on specific databases.

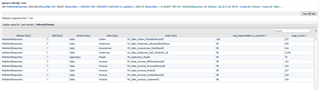

If you then go to the User Defined queries dashboard (and assuming you have left this to test overnight or during the hour you expect) you should expect something like this:

At least it would on a WideWorldImporters database

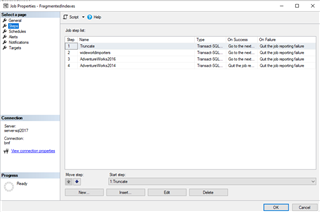

Another alternative method would be to put this query in a SQL Agent Job (one step for each database) and for alerting purposes have Foglight User Defined queries display or alert on the output. For example truncate a work table, then here three jobs to capture the data in each database and insert into the work table.

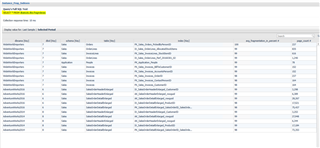

The output would look like this:

Foglight could alert if there are any rows in the table (ie. Something needs looking at) and the DBA could navigate here to see which indexes need rebuilding.

In Summary focus on what you need to address your fragmentation problems and don't just collect data because you can, it would either cause you performance problems on the instance or needless overhead on Foglight.