IT professionals today have a great deal on their plate. They are responsible for servers, applications, storage, networking, security and more. Throw in a cloud initiative, BYOD and a diverse array of endpoints like smartphones, and it’s easy to see why this can be a challenging profession. Oh, I almost forgot: you need to do all this with less money and fewer resources, while still innovating and helping move the company to the leading edge of its field.

After all that, you still need to deal with backup of your data. For many companies, backup runs in the background or overnight. It’s like an insurance policy: you have to pay for it but hope you never have to use it. Even if your business is not located in “Tornado Alley” or in an area susceptible to floods or hurricanes, disasters can and do strike. I have spoken with customers who have had roofs leak into data centers, electrical fires and even air conditioning failures, all of which led to a major IT event.

When describing best practices around backup, I often frame them in terms of 3-2-1.

- 3 – referring to 3 copies of your data

- 2 – meaning your data should reside on 2 disparate platforms

- 1 – having one of your data copies offsite
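
The three rules above can be expressed as a simple check. The following is a minimal sketch in Python, not part of any backup product: the `DataCopy` class, the `check_3_2_1` function and the platform names are hypothetical and exist only to illustrate the rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataCopy:
    """One copy of a data set (hypothetical model, for illustration only)."""
    name: str
    platform: str   # e.g. the storage vendor or device family holding this copy
    offsite: bool   # True if the copy lives away from the primary site


def check_3_2_1(copies):
    """Return which parts of the 3-2-1 rule a set of copies satisfies."""
    return {
        "three_copies": len(copies) >= 3,
        "two_platforms": len({c.platform for c in copies}) >= 2,
        "one_offsite": any(c.offsite for c in copies),
    }


# Example inventory: production, a local backup, and a replicated backup.
copies = [
    DataCopy("production", "production-san", offsite=False),
    DataCopy("local backup", "backup-appliance", offsite=False),
    DataCopy("replicated backup", "backup-appliance", offsite=True),
]

result = check_3_2_1(copies)
for rule, satisfied in result.items():
    print(f"{rule}: {'ok' if satisfied else 'MISSING'}")
```

In this example all three checks pass. Remove the replicated copy and both the copy count and the offsite check fail, which is exactly the exposure the rule is meant to catch.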

Let’s look at each of these in more depth. The 3 copies of data are (1) your production copy that is running live, (2) a full copy of that data on some form of backup target, typically disk, and (3) a replicated copy of that backup data somewhere else, which could be on tape or on a deduplication appliance like the DR 6300.

Why should you have data on 2 disparate platforms? The answer is risk. Backup is all about mitigation of risk, and if your backup data is sitting on the same platform as your production data (even if it’s physically a separate device), that’s additional risk. For example, let’s say you have a production SAN and you store your backup data on an older model of that same vendor’s SAN. If a firmware issue affects those units, you are more exposed than if your backup target were entirely different from the production environment. The other advantage of a separate platform is that a purpose-built backup appliance can bring significant benefits, such as faster ingest of data, client-side deduplication and optimized replication.

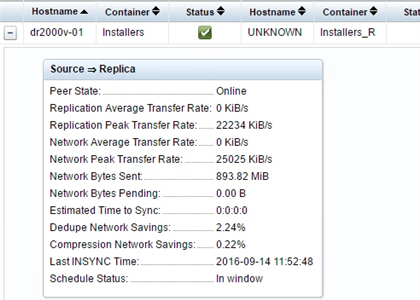

Having a copy of your data offsite is a must. Offsite can be defined as a secondary location, a cloud target or a co-location site. Even writing to tapes and storing them elsewhere is better than nothing. Today, replication of large volumes of data is not nearly as daunting a task as it used to be. I often recommend our line of Quest DR deduplication appliances to customers because they are agnostic of backup software and replicate only deduplicated delta changes of data. Put another way, replicating a 20TB backup could take many days, but if that same data is 95% identical at the sub-block level to the last backup the appliance received, you are only sending 1TB of unique data, which is retrievable as the full 20TB at your DR site. The accompanying snapshot shows the detailed replication statistics that are available. That snap is from a newly created replication peer, but I typically see savings exceeding 80%, meaning that more than 80% of what’s backed up is not sent across the WAN.
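
To make the arithmetic concrete, here is a rough sketch in Python of how much data crosses the WAN and how long it might take. The 100 Mbit/s link speed is purely an assumption for illustration, as are the function names. The calculation also assumes decimal terabytes and a fully utilized link with no protocol overhead, so real transfers will be slower.

```python
def unique_data_tb(backup_tb, common_fraction):
    """Data that must actually be sent when a fraction is already on the target."""
    if not 0.0 <= common_fraction <= 1.0:
        raise ValueError("common_fraction must be between 0 and 1")
    return backup_tb * (1.0 - common_fraction)


def transfer_days(data_tb, link_mbps):
    """Idealized transfer time in days (decimal TB, no protocol overhead)."""
    bits = data_tb * 1e12 * 8
    seconds = bits / (link_mbps * 1e6)
    return seconds / 86_400


BACKUP_TB = 20          # backup size from the example above
COMMON_FRACTION = 0.95  # share of sub-blocks the appliance already holds
LINK_MBPS = 100         # assumed WAN speed, not a figure from the article

to_send_tb = unique_data_tb(BACKUP_TB, COMMON_FRACTION)
full_days = transfer_days(BACKUP_TB, LINK_MBPS)
dedup_days = transfer_days(to_send_tb, LINK_MBPS)

print(f"Full replication:   {BACKUP_TB} TB, about {full_days:.1f} days")
print(f"Deduplicated delta: {to_send_tb:.1f} TB, about {dedup_days:.1f} days")
```

Under these assumptions, the full 20TB would need roughly 18.5 days on the wire, while the 1TB of unique data would take less than a day.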

In my next blog, I’ll be spending some time on database backups: different methods, best practices and the options available to you.

To learn more about our deduplication appliances and how they can solve replication challenges, visit https://www.quest.com/products/dr-series-disk-backup-appliances/