As hybrid cloud becomes more prevalent, its growing complexity compounds the challenges of cloud adoption and of using multiple cloud platforms. In this blog, we’ll examine some of the major risks an organization and its leaders face when hybrid cloud (a mixture of one or more cloud providers, one or more cloud configurations and on-premises systems) becomes reality.

Hybrid cloud presents risks of the unknown

Risks associated with a hybrid cloud environment are not that different from the risks any business, or any area of a company, may face, including loss of control over costs and reduced quality of service. However, hybrid cloud magnifies these risks, and managing and mitigating them is ultimately the responsibility of the CIO or head of IT.

Consider this: hybrid cloud is new to many organizations, so there are many unknowns regarding how best to control costs, how best to provision resources and how to ensure that all teams are aligned on making productive and cost-effective use of the various cloud environments available to them.

It is worthwhile now to define risks in the context of our topic, especially risks directly impacting C-level IT leaders.

Risks to CIO strategy in hybrid IT

Complexity, unknowns and hard-to-find skillsets are common when hybrid cloud is present in an organization. These are not the friends of C-level IT leaders with a strategic vision of IT services. CIOs want, above all, to see their strategies become reality.

One risk to the CIO is that his or her strategic plans turn out to be based on inaccurate assumptions and require adjustment. Another is that, because all of this is so new, the strategy cannot succeed without major OpEx or CapEx investment and significant unplanned effort by IT personnel. Often, one or more of these reasons is the culprit:

- Cloud costs skyrocket in the effort to keep quality of service high.

- The unplanned introduction of cloud platforms by teams outside of IT becomes a burden on IT.

- Performance problems that affect applications and customer experience turn out to be too difficult to detect quickly, or to diagnose accurately enough to prevent recurrences.

- The data pipeline is not consistently flowing, so data is not available for business decision-making.

Let’s dive into these one at a time. Then, we’ll look at ways to remove the guesswork and solve these CIO headaches.

Cloud cost explosion

Of all the things Hybrid Ops is meant to deliver, the ideal is accurate cost models that help IT and application teams decide which workloads should be moved to the cloud. CIOs love cost models and cost control, so this is the holy grail.

However, costs can get out of control quickly in hybrid cloud environments because:

- Few people in the organization understand the ramifications of choosing certain cloud configuration options or billing choices.

- Workloads are using more compute power or storage than imagined.

- Workloads get moved to cloud offerings without cost modeling beforehand.

What’s needed is a way to determine the expected resource consumption of workloads ahead of time, and then to apply those estimates to cost models that predict what that consumption will cost in the cloud. Only then can informed, rational decisions be made about choosing one cloud vendor or configuration over another, or about moving to the cloud at all.
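To make the "estimate consumption first, then price it" idea concrete, here is a minimal Python sketch of such a cost model. It assumes a deliberately simple pricing scheme (per vCPU-hour, per GB of memory per hour, per GB of storage per month); the workload names, resource figures and prices are all hypothetical placeholders, not real vendor rates. In practice the consumption estimates would come from measured utilization in a monitoring tool, and the prices from the provider's published pricing.

```python
from dataclasses import dataclass


# All prices used with this class are HYPOTHETICAL placeholders, not vendor rates.
@dataclass(frozen=True)
class PriceSheet:
    name: str
    per_vcpu_hour: float          # cost of one vCPU for one hour
    per_gb_ram_hour: float        # cost of one GB of memory for one hour
    per_gb_storage_month: float   # cost of one GB of storage for one month


@dataclass(frozen=True)
class WorkloadEstimate:
    name: str
    vcpus: float                    # expected average vCPUs, e.g. from monitoring data
    ram_gb: float                   # expected average memory in GB
    storage_gb: float               # expected storage footprint in GB
    hours_per_month: float = 730.0  # roughly 24 h x 365 days / 12 months


def monthly_cost(w: WorkloadEstimate, p: PriceSheet) -> float:
    """Predicted monthly cost of one workload under one price sheet."""
    hourly_compute = w.vcpus * p.per_vcpu_hour + w.ram_gb * p.per_gb_ram_hour
    storage = w.storage_gb * p.per_gb_storage_month
    return round(hourly_compute * w.hours_per_month + storage, 2)


def compare(workloads, sheets):
    """Total predicted monthly cost of all workloads for each price sheet."""
    return {p.name: round(sum(monthly_cost(w, p) for w in workloads), 2) for p in sheets}


# Hypothetical usage: two workloads priced against two candidate configurations.
workloads = [
    WorkloadEstimate("orders-db", vcpus=4, ram_gb=16, storage_gb=500),
    WorkloadEstimate("reporting", vcpus=2, ram_gb=8, storage_gb=200),
]
sheets = [
    PriceSheet("option-a", per_vcpu_hour=0.04, per_gb_ram_hour=0.005, per_gb_storage_month=0.10),
    PriceSheet("option-b", per_vcpu_hour=0.05, per_gb_ram_hour=0.004, per_gb_storage_month=0.08),
]
totals = compare(workloads, sheets)

# List candidate configurations from cheapest to most expensive.
for name, total in sorted(totals.items(), key=lambda kv: kv[1]):
    print(f"{name}: ${total:,.2f}/month")
```

With these made-up inputs, option-a (about $332.80/month) comes out cheaper than option-b (about $345.08/month), even though option-b has cheaper memory and storage. That is exactly the kind of non-obvious result that only shows up once consumption is modeled before migrating. Real pricing adds reserved-instance discounts, egress charges and licensing, which this sketch ignores.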

DBAs and virtualization admins/engineers using a robust performance monitoring tool should be able to make a meaningful contribution to these cost models.

Unplanned cloud sprawl (“accidental hybrid”)

Application teams and other non-IT entities may introduce public cloud virtual machines into the organization. Sometimes called “accidental hybrid cloud,” this approach carries risks that can obliterate plans and CIO strategy. One thing is for sure: hybrid cloud requires planning, and lots of it.

Hybrid Ops exist in part to ensure that planning happens and to put in place standard practices for provisioning cloud environments, securing access, training personnel, controlling costs and much more. Maybe it’s obvious, but skipping those considerations is a recipe for disaster. The lack of communication that usually accompanies this kind of cloud sprawl often contributes to a disorganized response to performance issues, unbudgeted costs and strain on IT operations teams, who are asked to help with things like databases that have been deployed on Azure or AWS.

So, which of our IT roles are affected? DBA, Virtualization Engineer, Cloud Engineer? Absolutely. CIO? Yes: uncontrolled costs and an unplanned burden on operations teams affect the CIO’s strategy. They upend plans and can portend poor quality of service.

Performance problems are happening…somewhere

In a hybrid cloud environment, risks abound for application and infrastructure performance, such as:

- Performance is inconsistent. Is someone monitoring performance on AWS, Azure, VMware or Hyper-V on-premises, etc.? How about on SQL Server, Oracle, Postgres, MySQL, MongoDB or across your entire hybrid IT environment?

- SLAs are not being met. Maybe they’re unrealistic or they need adjustment, but it’s a red flag demanding attention when it happens – especially for customer-facing applications.

- Poor user experience. Frustrated customers who have a choice go elsewhere.
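One common way to turn "SLAs are not being met" into a number someone can monitor is SLA attainment: the share of measured requests that meet the agreed target. The short Python sketch below assumes a hypothetical 500 ms response-time target, a hypothetical 95% attainment objective and a made-up list of sampled response times; none of these values come from this post.

```python
def sla_attainment(response_times_ms, target_ms=500.0):
    """Percentage of samples whose response time is at or under the target."""
    if not response_times_ms:
        raise ValueError("need at least one sample")
    met = sum(1 for t in response_times_ms if t <= target_ms)
    return 100.0 * met / len(response_times_ms)


# Hypothetical response-time samples in milliseconds.
samples = [120, 340, 480, 510, 95, 700, 230, 410, 300, 150]
objective_pct = 95.0  # hypothetical SLA objective

attainment = sla_attainment(samples)
print(f"SLA attainment: {attainment:.1f}%")  # 8 of 10 samples meet the 500 ms target
if attainment < objective_pct:
    # A red flag worth investigating, especially for customer-facing applications.
    print("Below SLA objective: investigate")
```

The same calculation works for availability checks or any other pass/fail measurement; what matters is tracking it continuously rather than discovering a missed SLA after customers complain.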

Data pipeline isn’t flowing

The data pipeline is just as it sounds: a transmission channel for data within the organization. The pipeline starts at data collection, then moves through storage, processing/transformation and access, and finally reaches “data intelligence,” whether that’s mining, analytics, machine learning or simple reporting. At that final stage, the data is put to productive use.

So, if something stops the flow of data in the organization, guess what: frustration, complaints and accusations will be a way of life for the DBA, the DBA manager and the CIO until the pipeline is turned back on.

What impedes data flow? There are many culprits:

- The complex hybrid infrastructure is poorly configured

- The relocation of data and database workloads was done without adequate planning

- The hidden multitude of problems that arise in poorly tuned databases, poorly sized virtual machines or monster database queries

- Availability problems were not sufficiently dealt with

Any of these can shut the pipeline down, and it can take a significant amount of time before anyone figures out the cause. Turning it back on becomes an urgent priority for IT.

Summary of CIO-level risks

These aspects of performance – response time, availability, SLA attainment – are all critical factors in measuring that CIO top-of-list risk: quality of service.

Risks abound in hybrid environments for obvious reasons: more platforms, multiple clouds and lack of skills. These make managing the performance of databases and the workloads running against them extremely difficult.

Timely detection of problems on the database or in the infrastructure is key. Then, investigation and diagnosis of the root cause are what bring the solution within grasp. Resolve the problem, and your performance monitoring tool has paid for itself, or is well on its way to doing so.
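Mean time to resolution (MTTR) is a common way to measure how well that detect, diagnose and resolve cycle is working. As a minimal sketch, the Python below computes MTTR as the average time from detection to resolution over a set of incidents. Measuring from detection (rather than from when the problem actually began) is an assumption made for this example, and the incident timestamps are hypothetical.

```python
from datetime import datetime, timedelta


def mean_time_to_resolution(incidents):
    """Average of (resolved - detected) across (detected, resolved) pairs."""
    if not incidents:
        raise ValueError("need at least one incident")
    total = sum((resolved - detected for detected, resolved in incidents), timedelta())
    return total / len(incidents)


# Hypothetical incident log of (detected, resolved) timestamps.
incidents = [
    (datetime(2024, 1, 5, 9, 0), datetime(2024, 1, 5, 10, 30)),    # 90 minutes
    (datetime(2024, 1, 12, 14, 0), datetime(2024, 1, 12, 14, 45)),  # 45 minutes
    (datetime(2024, 1, 20, 22, 15), datetime(2024, 1, 21, 1, 15)),  # 3 hours
]
print("MTTR:", mean_time_to_resolution(incidents))  # MTTR: 1:45:00
```

Faster detection shrinks the start of each interval and faster diagnosis shrinks the rest, so improvements in either show up directly as a lower MTTR.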

Diminishing hybrid risks

Let’s revisit each of the four reasons that a CIO strategy might fail in a hybrid environment, examining how advanced performance monitoring tools like Foglight can diminish the risks.

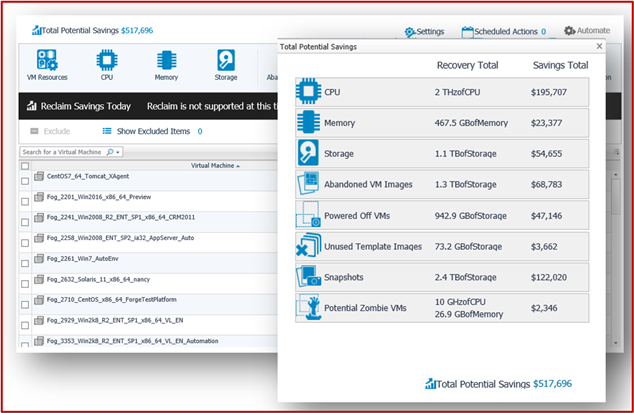

- Foglight addresses the cloud cost explosion risk with built-in cost optimization, cloud migration and resource optimization capabilities, as well as its deep and wide performance monitoring capabilities.

Optimize workloads before migrating to the cloud

Know your exact cloud costs before migrating

- The introduction of cloud instances by application teams should not deter IT from focusing on the success of all workloads and applications. Nobody is going to care how they were provisioned or whether standard processes were used to create them. Cost and quality of service are what matter most.

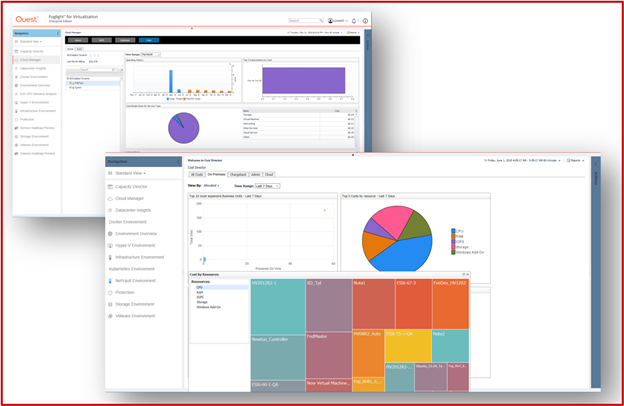

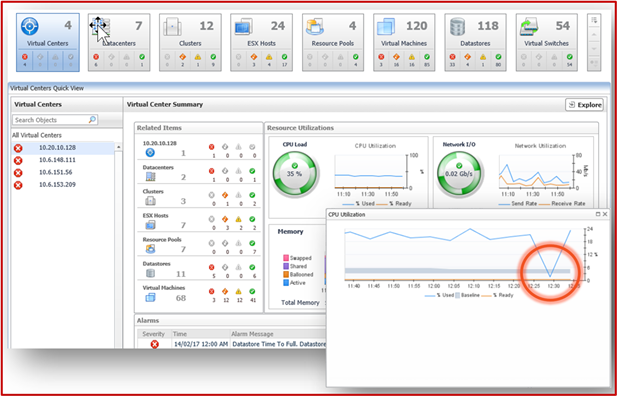

Using Foglight for database monitoring and diagnostics, and Foglight Evolve for virtual machine optimization and remediation of resource allocation problems, frees up time to identify and manage all these environments.

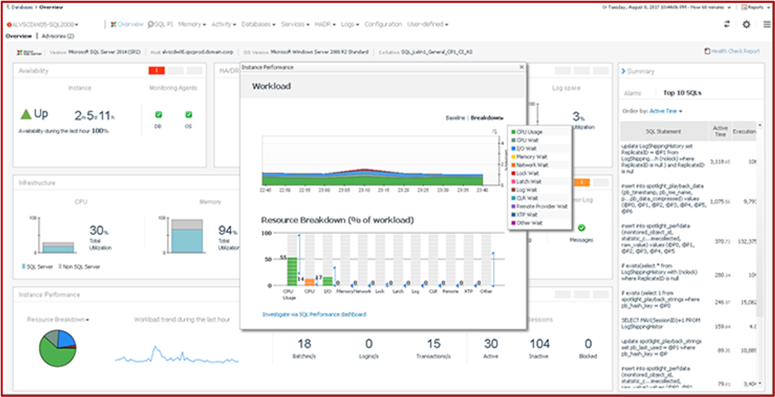

- Foglight excels at performance monitoring of many database platforms deployed in a variety of virtualization scenarios or cloud offerings. Mean Time to Resolution (MTTR) is the measurement of success in diagnosing and resolving an application performance problem. A lower MTTR means higher quality of service when problems are resolved before they substantially affect application users. Foglight’s deep monitoring across a broad range of databases and infrastructure platforms makes it an essential element of Hybrid Ops and of reducing hybrid costs.

Performance monitoring with Foglight – database, virtualization, storage

- The data pipeline is a key reason much of IT exists: without the ability to move data from one place to another, data becomes an expensive accessory.

Shorten MTTR using Foglight database and virtualization performance monitoring

Summary

Any mix of computing environments will introduce risk to the organization, but the days of monolithic databases are over. As your IT team grapples with multiple databases and hybrid cloud infrastructure, make sure you have the tools you need to monitor performance and quickly diagnose issues. This will dramatically decrease the risk of downtime and its associated effect on the business.


John.Pocknell

over 4 years ago